Introducing MXT-2: The Next Evolution in AI Video Indexing

Our latest AI model is trained on three times more data than its predecessor, making it even better at understanding what's in your videos to generate detailed and customizable descriptions.

For years, the way to make large video libraries searchable was to add descriptive metadata, such as a title, a description, and a few keywords, to ingested video. But this was a manual task: tedious, to say the least, and impossible to scale with the amount of video being produced today.

Next came AI-powered video indexing tools, promising to automate the process. They tagged videos with faces and objects and provided transcripts, but without context. They simply flooded media asset management (MAM) and digital asset management (DAM) systems with irrelevant metadata, making search results messy and creating more problems than they promised to solve.

It’s why we launched MXT in late 2023—an AI that actually understands and describes video moments like a human. It breaks videos down into meaningful scenes, recognizing who’s in them, what’s happening, where it’s taking place, and even what kind of shots are used. It can also pull the best soundbites from interviews, speeches, or press conferences—so users don’t have to waste time scrubbing through footage.

Customers have used MXT to find and repurpose content from their video libraries seven times faster. And now, with MXT-2, we’re making video indexing even smarter and more accurate.

Improved Accuracy in MXT-2

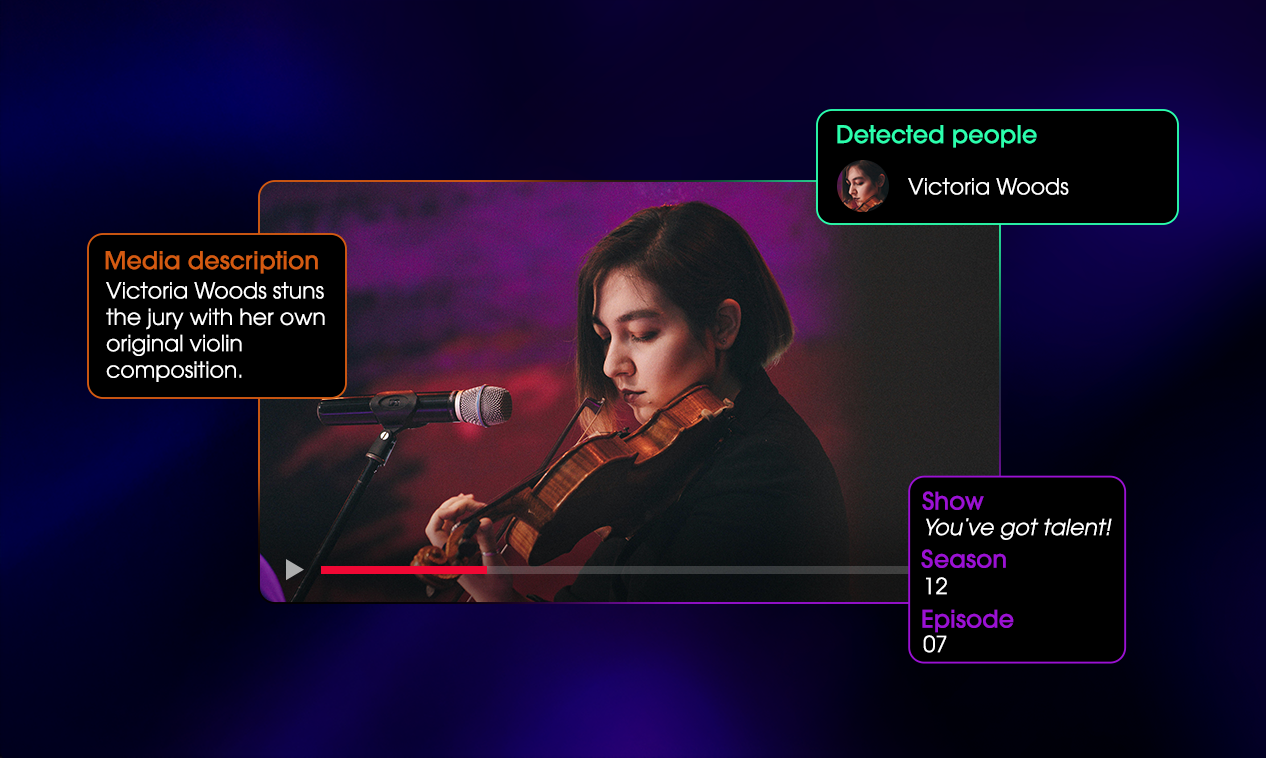

Most AI-powered video indexing tools rely on labels to make footage searchable. While tagging a clip with “dog,” “beach,” or “crowd” can be helpful, it doesn’t tell you what’s actually happening in a scene. Instead of just assigning generic tags, our technology generates rich, timecoded descriptions that explain each moment like a human would. This level of detail and context makes it much easier to find the exact moment you need, rather than sifting through hundreds of vaguely tagged clips.

And with MXT-2, the descriptions are now even smarter and more precise.

MXT-2 is trained on three times more data than our previous model, making it much better at describing what’s happening in a video. It’s capable of capturing far greater detail and nuance in video content.

To illustrate this improvement, consider the difference between how the previous model and the new MXT-2 model describe the same image.

Pinpointing the Best Highlights with Custom Moments

Another new addition to MXT-2 is a feature we’re calling Custom Moments. With Custom Moments you can now define exactly how you want a specific content type to be segmented—making it easier than ever to find specific moments at scale.

Instead of sifting through hours of footage, you can instantly pinpoint the clips that matter most for your project.

For example:

- A digital team working with past seasons of a cooking show can use Custom Moments to automatically highlight and describe each dish presentation to the judges. Logging details like the type of dish and the contestant’s ranking (winner, top three, or eliminated) enables them to create a searchable collection of the best vegetarian dishes across multiple seasons.

- A journalist can break down each aired newscast into individual story segments and tag them by pre-defined themes: major event, feel-good story, lifestyle news, economy, or even the weather report. This makes it easy to search and repurpose specific stories for later use.

- An editor working on a trailer for a feature-length nature documentary can instantly surface the most visually striking scenes or key phrases, suggested based on the narrative of the program. Instead of manually combing through hours of footage, they can view suggested key moments such as breathtaking cinematography or impactful quotes.

- Instead of manually reviewing hours of racing footage, a sports editor covering a rally championship can quickly compile the most jaw-dropping moments of the season by setting Custom Moments to detect high-impact crashes, overtakes or near misses.

Custom Moments ensure that the most relevant, high-impact content is instantly accessible, whatever story is being crafted, reducing manual edit time.

Automating Content Classification at Scale

Tagging and organizing video has always been a messy, inconsistent process. Even when organizations try to enforce naming conventions and taxonomies, some users label clips one way and others label them another, leaving the library confusing and disorganized.

That’s why with MXT-2, we’re introducing another feature called Custom Insights. Custom Insights will automatically classify video content with precision and consistency. By harnessing the metadata generated by MXT, Custom Insights can instantly categorize videos based on whatever parameter makes the most sense for you. Whether it's by topic, theme, content type, or something more specific, classification is no longer a manual burden.

It can also go even deeper. Take a boxing match, for example. You could ask Custom Insights to classify the video by:

- The weight class of the fighters

- Who won

- Which round the fight was decided in

- Whether the fight ended by knockout, points decision, or draw

With this level of insight, you could instantly search for Floyd Mayweather’s best knockouts within the first five rounds, making content discovery effortless.
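To make the idea concrete, here is a minimal sketch of how a search like this could work once each fight carries structured classifications. The record shape, field names, and the `find_fights` helper are hypothetical and chosen only for illustration; they are not the actual Custom Insights schema or API, and the fights listed are placeholder data.

```python
from dataclasses import dataclass


# Hypothetical record shape: these field names are illustrative assumptions,
# not the real MXT-2 / Custom Insights metadata schema.
@dataclass
class FightInsights:
    title: str
    weight_class: str
    winner: str
    deciding_round: int
    outcome: str  # "knockout", "points decision", or "draw"


def find_fights(library, winner, outcome, max_round):
    """Return fights won by `winner` with the given outcome, decided by round `max_round` or earlier."""
    return [
        fight
        for fight in library
        if fight.winner == winner
        and fight.outcome == outcome
        and fight.deciding_round <= max_round
    ]


# Placeholder data, made up for this example.
library = [
    FightInsights("Fight A", "welterweight", "Boxer X", 4, "knockout"),
    FightInsights("Fight B", "welterweight", "Boxer X", 12, "points decision"),
    FightInsights("Fight C", "lightweight", "Boxer Y", 2, "knockout"),
    FightInsights("Fight D", "welterweight", "Boxer X", 9, "knockout"),
]

early_knockouts = find_fights(library, winner="Boxer X", outcome="knockout", max_round=5)
print([fight.title for fight in early_knockouts])
```

Because every fight is classified against the same fields, the query is a simple filter rather than a free-text search over inconsistent tags.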

This capability not only streamlines video organization but also makes exploratory searches far easier—whether you’re archiving footage, pulling clips for a project, or just trying to find the best moments in a massive video library.

Generating Text From Video Content

Custom Insights doesn’t just help you find content; it also helps you use it. As well as generating media-level tags to help classify video, it can automatically generate text for publishing, or simply for getting information about a video.

Here are just a few examples of how you can use Custom Insights to generate text from your video.

- Generate engaging captions, descriptions, and hashtags for videos you want to publish to your social platforms.

- Craft descriptions for a program to be published to a streaming platform, without giving away any spoilers.

- Create match reports on key sports games and events to publish on your website.

- Provide insights on who is mentioned in a video, but not seen.

MXT-2 is set to transform how lean media teams discover and make the most of their extensive video content, speeding up production workflows and enhancing storytelling.

Organizations can search and discover MXT-2 indexed content using the Moments Lab video discovery platform, or integrate MXT-2 generated metadata into their current tool of choice.

As we continue to innovate and expand our AI technology, we remain dedicated to providing our clients with the tools they need to succeed.

Want to learn more about what success looks like with Moments Lab and MXT-2? Get in touch with us.
